Why balanced research methods build better products

When deciding which direction your product should take, you’re often juggling multiple factors: typically a balance between business needs, user needs and, inevitably, personal opinion. Ideally, opinions should help form the foundations of an idea, not validate a direction or solution on their own.

At Hyperact, one of our five core beliefs is that evidence should always outweigh opinion. We advocate for using data, user research, and real-world experimentation to guide decisions, not HiPPOs (the Highest Paid Person’s Opinions). This evidence-led approach often stems from experience working in modern product teams, where learning and iteration are part of the culture.

It’s usually the team members closest to the product, embedded within or working across product teams, who already understand this value and seek answers from data. But it often falls to those same teams to manage the scepticism of senior leaders who remain unconvinced about the value of speaking to users.

With that in mind, your goals in this scenario are twofold: validate internal assumptions and win support from the wider organisation.

In my experience, the best way to achieve both is to blend quantitative and qualitative research methods, building a full picture and providing rounded evidence so you can move forward with confidence.

Let’s dig into these approaches and discuss what value they provide.

Quantitative options

Quantitative research is a method of gathering measurable data that can be analysed using statistical techniques. It focuses on answering questions like “how many?”, “how often?”, or “to what extent?” The goal is to identify patterns, quantify behaviours or opinions, and produce insights that are scalable and generalisable across larger groups.

Statistical analysis of existing data is one example of quant. If you’re lucky enough to have confidence in your product tagging you’re likely to have access to existing data in various guises. This is a powerful tool to raise any product concerns and tends to be used as a trigger for a business case to move into a discovery rather than an evaluative research method itself.

On-site experiments (A/B or multivariate (MVT) testing) are another quantitative research method, but they tend to be used to further validate tactical UX assumptions in very specific areas of your product rather than to drive the outcomes of a discovery.

This brings us to surveying. A survey tends to be relatively low effort to plan and release. Feedback can be gathered either from within your product, for example through an on-site survey using a tool such as Hotjar, or by sending the survey out via communication channels such as email to your user base. You can even survey within your business, but be warned: respondents are unlikely to represent your user base, so strong biases will be baked in, and the volume you get back isn’t likely to be statistically significant.

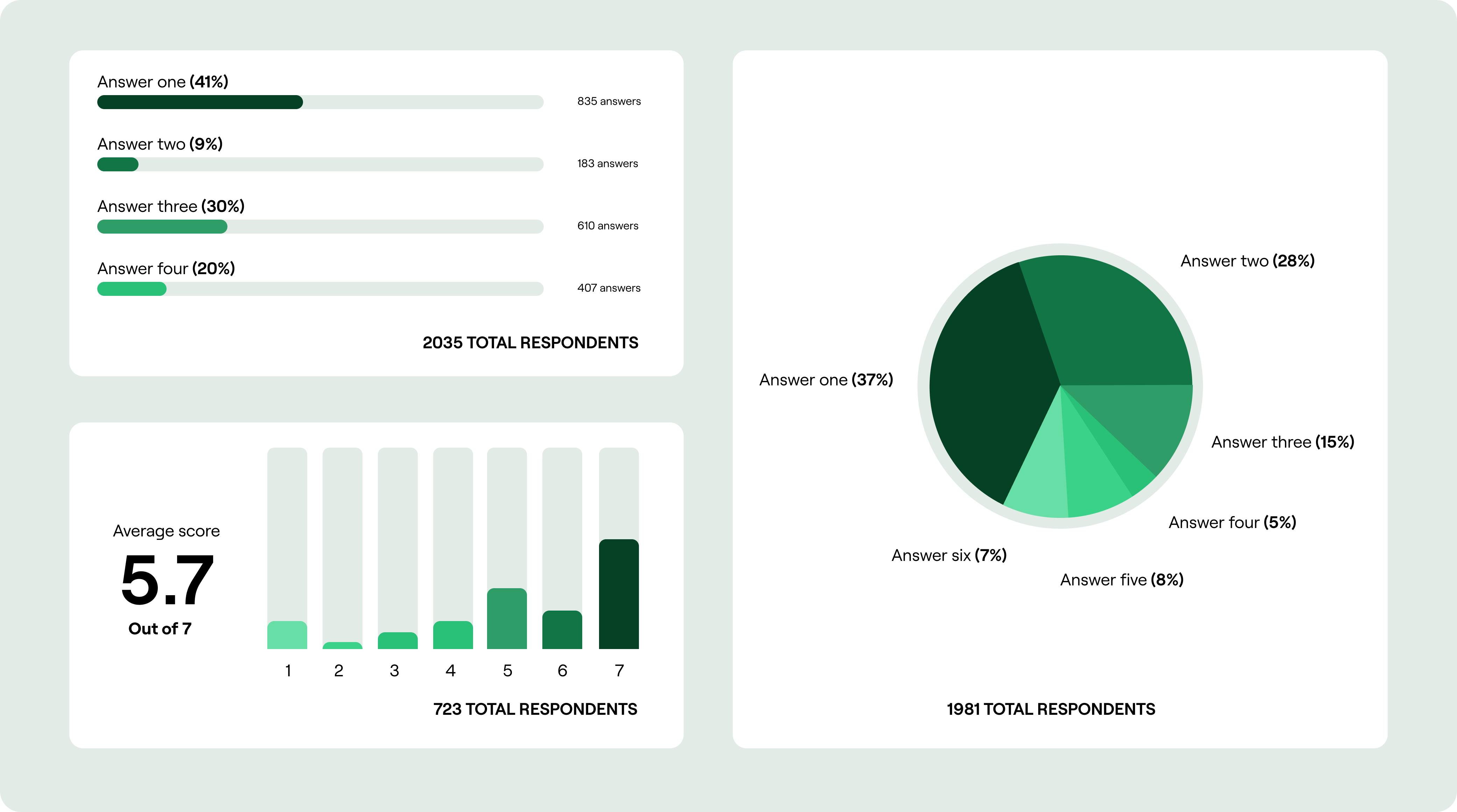

The ideal outcome of using a survey is to get a high volume of responses so your data represents a realistic spread of personas and use cases.

A good survey response rate generally falls between 20-30%, with anything above 30% considered excellent. For smaller populations, surveying all members or aiming for a 10% sample (up to 1,000) is recommended. A minimum of 100 responses is often suggested for meaningful results, but the more you can bump this up, the more confidence you can have in the data.
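As a rough illustration of the response-rate arithmetic above, this Python sketch estimates how many people you would need to invite to reach a target number of responses. The function name and the example figures are illustrative assumptions, not output from any particular survey tool.

```python
import math


def invites_needed(target_responses: int, response_rate_pct: float) -> int:
    """Estimate how many invitations to send to reach a response target.

    response_rate_pct is the expected response rate as a percentage (e.g. 20 for 20%).
    """
    if not 0 < response_rate_pct <= 100:
        raise ValueError("response_rate_pct must be greater than 0 and at most 100")
    # Round up: you cannot send a fraction of an invitation.
    return math.ceil(target_responses * 100 / response_rate_pct)


# Hypothetical example: aiming for the 100-response minimum at a 20% response rate.
print(invites_needed(100, 20))
```

If your expected response rate is lower than this, the number of invitations needed grows quickly, which is worth knowing before you agree a timeline.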

The core of the survey should be binary radio buttons or tick boxes so the analysis is directly quantifiable. You can pepper this with qualitative questions such as ‘Is there anything else you’d like to see here?’, which can be turned into quant using affinity sorting: grouping similar answers into themes and counting them.
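To show how affinity-sorted free-text answers can become numbers, here is a minimal Python sketch. It assumes each open response has already been grouped under a theme by hand; the responses and theme labels are made up for illustration.

```python
from collections import Counter


def theme_shares(tagged_responses: list[tuple[str, str]]) -> dict[str, float]:
    """Return the percentage of responses per theme, most common first."""
    counts = Counter(theme for _, theme in tagged_responses)
    total = sum(counts.values())
    return {theme: round(100 * n / total, 1) for theme, n in counts.most_common()}


# Hypothetical (response, theme) pairs produced by a manual affinity sort.
tagged = [
    ("I couldn't find delivery costs", "pricing clarity"),
    ("Wish there were more photos", "product imagery"),
    ("Delivery price appeared too late", "pricing clarity"),
    ("Checkout felt slow", "performance"),
]

for theme, share in theme_shares(tagged).items():
    print(f"{theme}: {share}% of {len(tagged)} comments")
```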

Considerations with this method:

- If you’re gathering survey data from within your product you’re likely to have to get budget for the survey tooling, though this doesn’t have to cost a lot.

- You’ll also need to consider the disruption an on site survey may cause. This is a balance between gathering useful data as quickly as possible and not impacting your business goals. As an example a post purchase on site survey causes minimal disruption.

- If you’re reaching out to your existing customer base, check that you have consent to do so via their opt-in preferences, and segment your email list accordingly.

- If your business is large enough to have a marketing function, you’re likely to have to work with them to plan and publish this type of feedback.

- You’re likely to get more feedback quickly if you incentivise the completion of the survey. A ‘prize draw’ with an Amazon voucher tends to be enough to get a good response. Again check with your internal teams that this is feasible.

The outcomes and outputs:

This will give you data you can communicate in cold, hard numbers and percentages. That format tends to lay the foundations for agreeing, with enough confidence, which direction to travel.

When you play this data back to your peers and business leaders, make sure to surface the sample sizes alongside it to maximise trust in your data.
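One way to surface sample sizes is to print them next to every percentage, along with a rough margin of error. The sketch below is an assumption-laden illustration: it uses the standard normal approximation for a proportion at roughly 95% confidence (z = 1.96), which presumes a reasonably random sample, and the function names and figures are hypothetical.

```python
import math


def margin_of_error(proportion: float, sample_size: int, z: float = 1.96) -> float:
    """Approximate margin of error for a proportion (normal approximation)."""
    if sample_size <= 0:
        raise ValueError("sample_size must be positive")
    return z * math.sqrt(proportion * (1 - proportion) / sample_size)


def playback_line(label: str, count: int, sample_size: int) -> str:
    """Format a survey result with its sample size and margin of error."""
    proportion = count / sample_size
    moe_points = margin_of_error(proportion, sample_size) * 100
    return f"{label}: {proportion:.0%} (n={sample_size}, ±{moe_points:.1f} pts)"


# Hypothetical result: 124 of 200 respondents picked this option.
print(playback_line("Found pricing unclear", 124, 200))
```

A wide margin of error is a useful prompt to gather more responses before presenting a finding as settled.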

Qualitative options

Qualitative research is a method of exploring people’s behaviours, experiences, and motivations through non-numerical data. It focuses on understanding the “why” and “how” behind actions and decisions. The goal is to uncover deep insights, reveal patterns in thought or emotion, and provide context that helps explain the numbers behind the quantitative findings. As mentioned when discussing surveys, you can use them to ask some open-ended questions to feed your qualitative insights, but in my experience the answers tend to be rushed, surface level and not particularly insightful.

This is where interviews come in. On their own these can be dismissed as ‘an opinion of someone who doesn’t know as much about our industry as I do’ by HiPPOs, but combined with quantitative insights these are a powerful emotional accompaniment to help us define the direction our discovery should take.

Within this method there are a few variations. Moderated interviewing is a real-time conversation between someone in your business and a prospective or existing customer. This method is likely to require the most effort to plan, run and analyse, but it also provides the best quality insights.

You can steer a conversation if it veers off course, or equally explore an avenue previously unplanned should it arise and seem useful.

Unmoderated interviewing involves sending a predefined set of questions and recording the open responses of a prospective or existing customer thinking out loud. This takes up less time for those involved in the research, but the quality tends to be lower as you do not get a chance to prompt or steer.

Statistical significance isn’t the goal with interviews. A good rule of thumb is 5–8 participants per key user group.

If you have the luxury to do so, continue until insights become repetitive.

If you’re going to recruit and conduct the interviews online, tooling can be more pricey than survey tooling, but if you can get some temporary budget to prove value for this, you’re likely to get buy-in to establish a more ongoing dual track discovery budget.

You could also perform your interviews in a more guerrilla way, chatting to prospective or existing users in person at an event or venue that is relevant to your user.

Considerations with this method:

- You need to consider how you’ll recruit your participants and ensure that you have a representative spread of your user groups. You could recruit via your chosen interviewing tool, via customer emailing, or via an external user recruitment agency.

- Create a rough script of key points for your interview facilitator to hit, but allow room to go off script if you spot value.

- Just like with the surveys you’ll likely need to engage with internal teams to make sure you’re contacting users and incentivising conversations in the correct way as well as receiving consent to record the conversations.

- Expect drop outs. If you book in ten 30 minute chats, it is inevitable at least a few won’t show. You should plan for this when opening up any booking slots.

- Make sure you’re able to access a recording of your interviews easily in order to create snippets and insights. If you’re doing some guerrilla testing this could be as simple as recording it using your mobile voice notes.
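To plan for drop-outs, you can work backwards from the number of completed interviews you want. This Python sketch is only a planning aid: the function name is hypothetical and the no-show percentage is an assumption you would replace with your own experience.

```python
import math


def slots_to_book(user_groups: int, per_group: int, no_show_pct: float) -> int:
    """Estimate how many booking slots to open so enough interviews still happen.

    no_show_pct is the share of bookings you expect to drop out (0 up to, not including, 100).
    """
    if not 0 <= no_show_pct < 100:
        raise ValueError("no_show_pct must be at least 0 and below 100")
    target_interviews = user_groups * per_group
    # Round up so the expected number of attendees still meets the target.
    return math.ceil(target_interviews * 100 / (100 - no_show_pct))


# Hypothetical example: 2 user groups, 5 interviews each, 25% expected no-shows.
print(slots_to_book(2, 5, 25))
```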

The outcomes and outputs:

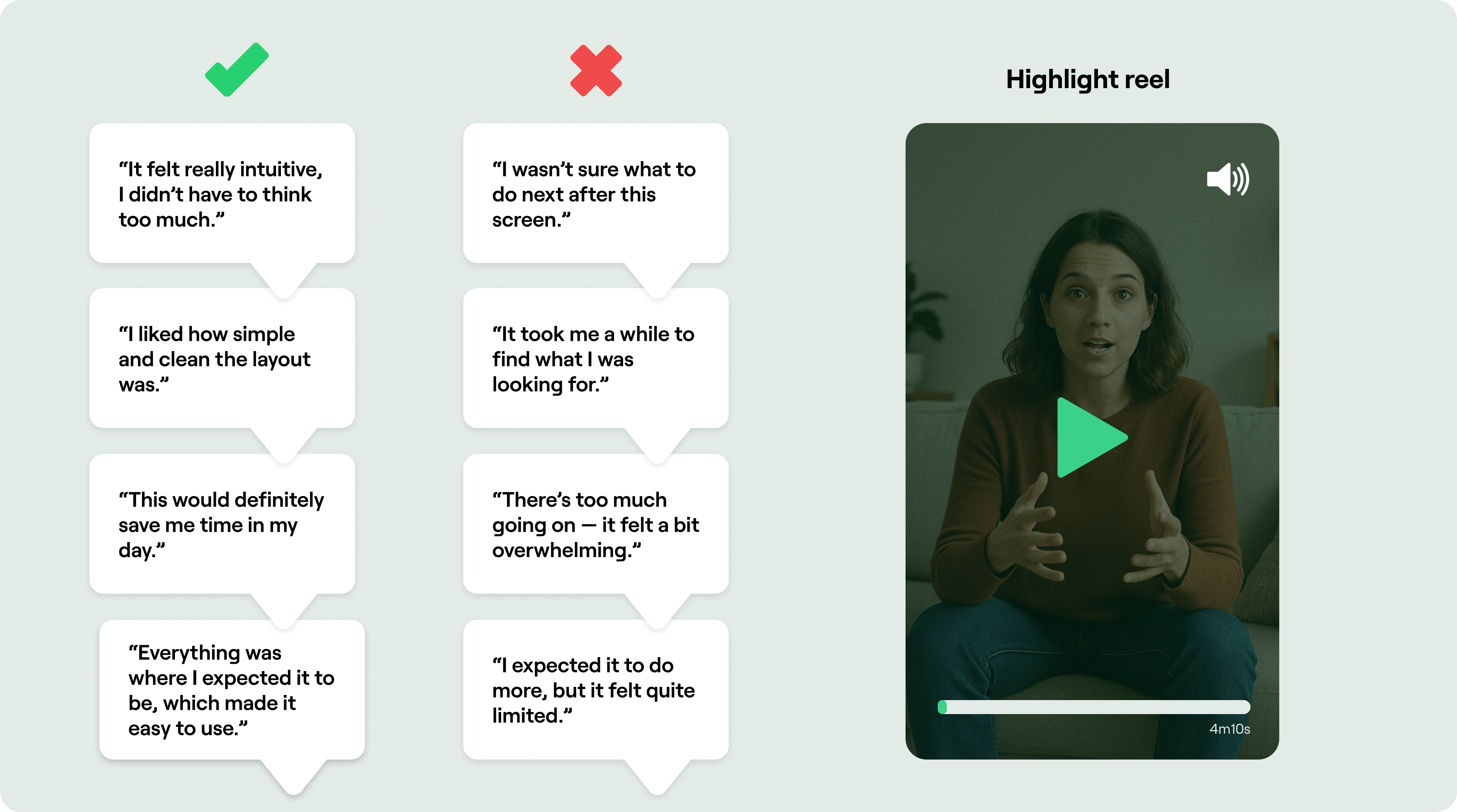

This will result in emotionally driven snippets that you can pepper across your playbacks to enhance your quantitative data, shining a light on the problems your product is facing and the most likely directions to head in.

You can pull out comments and present them in speech bubbles in your playbacks or, even better, embed short video clips from the conversations. This tends to resonate well, especially with stakeholders further removed from the day-to-day.

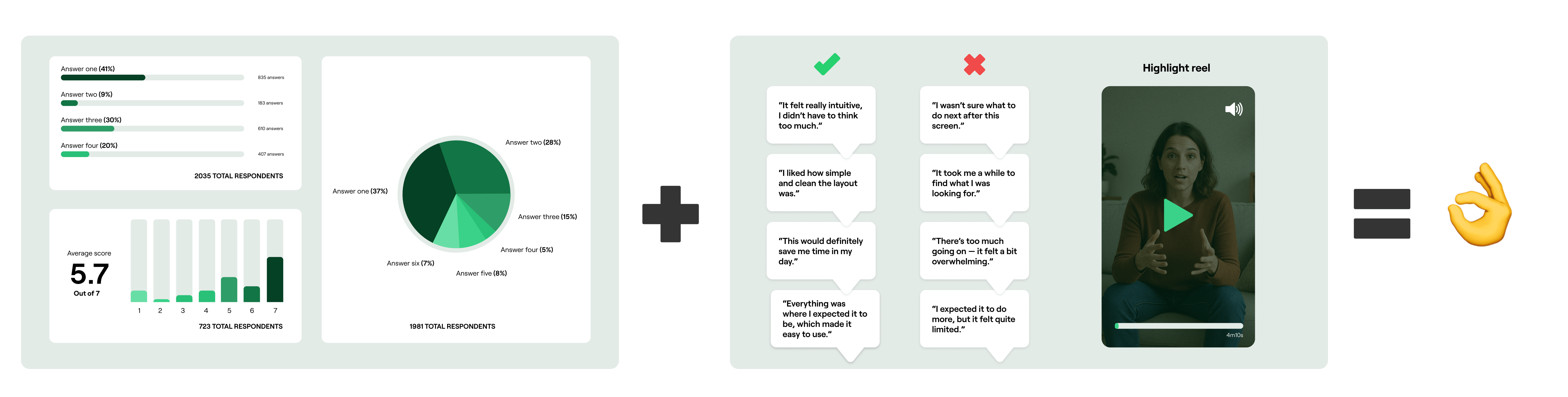

Start blending your research

This blended view is tried and tested (pun intended) to help align people from all across the business on where to go next.

But this type of research shouldn't be the end. It should answer some of the bigger, broader questions and give enough confidence for both you and your HiPPOs to go down the right path, get some code live and lay the foundations for further tactical refinement as you loop back and create a continual discovery and delivery loop.