Should I adopt MCP as part of my API product strategy?

APIs are no longer just integration points; they’re becoming strategic enablers of product-wide intelligence.

As AI products become first-class consumers of enterprise data, the traditional model of hardcoded API integrations is showing its limits. APIs now serve not just software engineers integrating APIs into static product workflows, but also intelligent agents that require dynamic, contextual access to data, tools, and workflows.

This evolution raises new questions:

- How do we enable seamless, real-time AI access to our systems, without custom plugins or brittle integrations?

- How do we scale these interactions across a fragmented AI landscape?

The Model Context Protocol (MCP), a key component in the answer to these questions, is a newly formalised specification designed to standardise how AI systems consume, interact with, and act on APIs.

In this article, we’ll explore how MCP brings your API Products closer to the AI landscape, reduces integration overhead, and offers a future-proof strategy for organisations building in the AI era.

API x MCP

APIs are the mechanisms that facilitate integration between your first- and third-party data and the vast landscape of AI products. MCP enables AI products to use your data as context for their responses and to invoke proprietary functionality on your (or a third party’s) platform. Having a mechanism to provide more context to an AI product goes some way towards lowering (but not eliminating) the risk of hallucinated or incorrect responses.

As the AI ecosystem grows more complex, with an expanding surface area of foundation models, fine-tuned variants, vector databases, and Retrieval-Augmented Generation (RAG) pipelines, so too does the integration burden.

The traditional bounded context approach of one endpoint per use case quickly becomes insufficient when your API clients have limited integration capabilities and must operate across a large, varied surface area. These constraints aren’t just technical; they’re strategic. As the surface area of AI products and services continues to expand, we need an approach that enables broad interoperability between diverse AI solutions and a wide range of API products.

MCP aims to reduce AI integration overhead by standardising how AI products interact with your first- and third-party data. Given the unlikely consolidation of the AI ecosystem and providers in the near term, we need effective ways to shield developers and APIs from the complexity of this fragmented landscape.

MCP, now published as a formal specification but still under active refinement, offers a consistent standard for integrating AI products with your data through a unified interface, shared semantics, and common usage patterns. This enables faster onboarding, stronger governance, and greater AI interoperability across your ecosystem.

As you shape your API product strategy for the AI era, it may be time to consider adopting MCP as an open integration standard alongside your traditional gateways and producer interfaces.

What exactly is MCP?

MCP is an integration layer that enables AI clients and applications to connect to data and execute functionality that resides outside the language model itself.

In more detail, the Model Context Protocol (MCP) breaks the static integration paradigm of traditional client-server API models. It introduces a dynamic layer between large language models (LLMs) and external tools, enabling runtime discoverability and execution without requiring tightly coupled integrations between the AI application or client and the integration source.

Traditional REST or GraphQL integrations typically rely on pre-negotiated, tightly coupled client relationships. MCP, by contrast, enables a dynamic capability exchange, where the host (e.g., ChatGPT or Claude Desktop) can ask at runtime, “What can you read?” and “What can you do?”
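As a rough illustration of that runtime exchange, the sketch below builds the JSON-RPC 2.0 requests a client might send to ask a server what it exposes. The method names (`tools/list`, `resources/list`, `prompts/list`) come from the MCP specification; the `make_request` helper and the request ids are illustrative assumptions, and a real client would first complete the `initialize` handshake over a transport.

```python
import json
from itertools import count
from typing import Optional

# Hypothetical id generator for the JSON-RPC envelopes below.
_ids = count(1)


def make_request(method: str, params: Optional[dict] = None) -> dict:
    """Return a JSON-RPC 2.0 request with an auto-incrementing id."""
    request = {"jsonrpc": "2.0", "id": next(_ids), "method": method}
    if params is not None:
        request["params"] = params
    return request


# "What can you do?", "What can you read?", and which prompts are on offer.
discovery_requests = [
    make_request("tools/list"),
    make_request("resources/list"),
    make_request("prompts/list"),
]

for request in discovery_requests:
    print(json.dumps(request))
```

The server answers each request with a list of descriptors, which is what lets the host decide at runtime which capabilities to offer the model.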

MCP servers become a new vessel for productising your application’s data and integrating it into the AI ecosystem. A single MCP server can expose your data to all compliant models without the need for tightly coupled client integrations, duplicated glue code, or vendor-specific plugins.

At a high level, MCP provides three key benefits:

- Decouples model behaviour from specific tools or systems – allowing AI clients to remain flexible and modular in rapidly evolving environments.

- Enables LLMs to discover APIs with zero configuration and at runtime – eliminating the need for pre-defined integrations and reducing developer overhead.

- Supports interoperability across an expanding landscape of AI agents, models, and tools – fostering seamless integration and collaboration within diverse ecosystems.

This approach is particularly valuable in scenarios involving retrieval, orchestration, or tool augmentation, where model flexibility and runtime composition are critical.

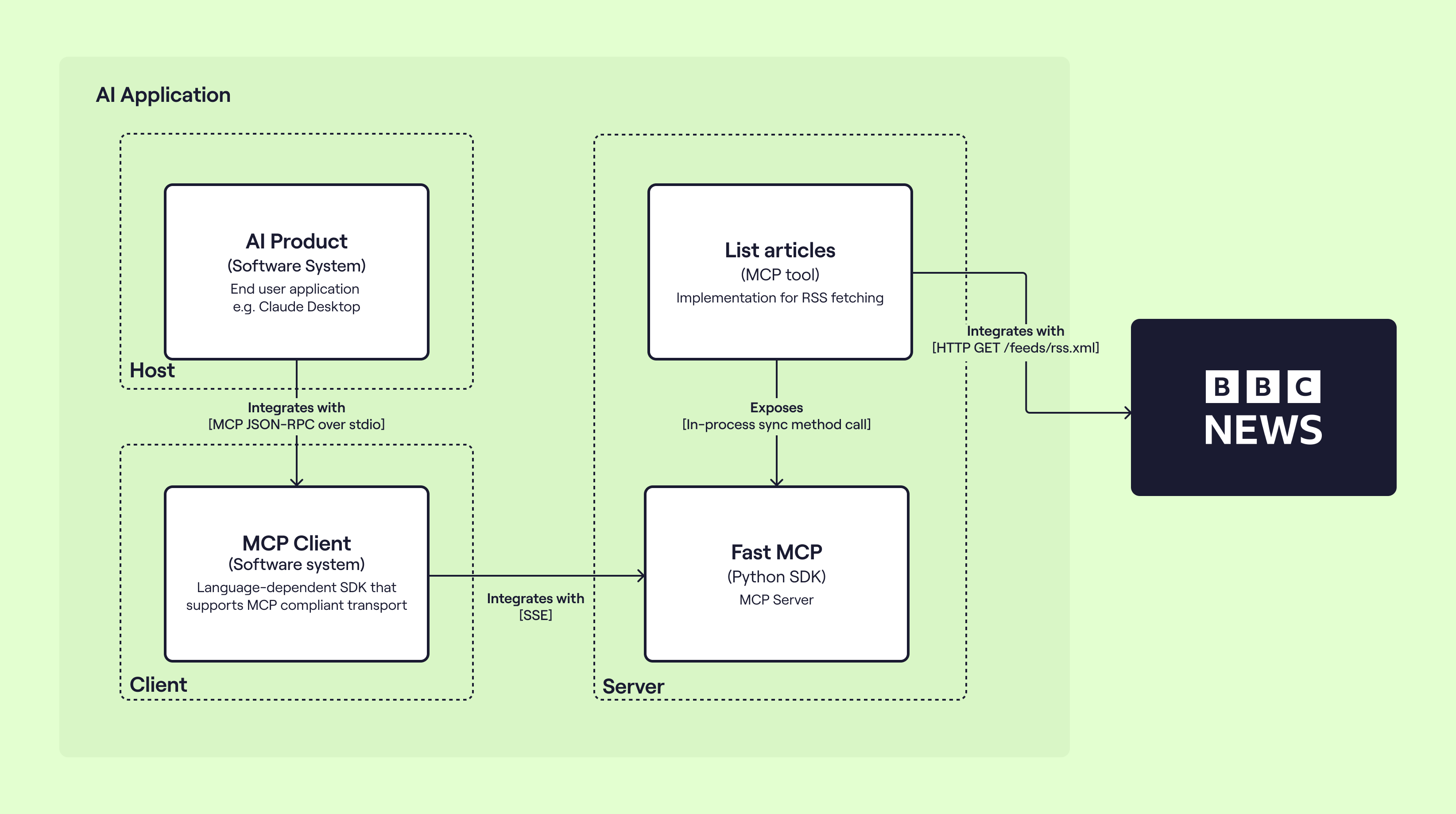

MCP is a client-server protocol comprising three primary components: the Host, the Client, and the Server.

| Role | Responsibility | Example |
| --- | --- | --- |
| Host | Runs the LLM or agent and requires access to external data or actions. | VS Code, ChatGPT Desktop, custom inference service |
| Client | Communicates using MCP on behalf of the Host and manages authentication, capability negotiation, and streaming. | A TypeScript app capable of connecting to an MCP server via a supported transport, such as Server-Sent Events (SSE) over HTTP or STDIO |
| Server | Wraps your API and advertises resources, tools and prompts via JSON-RPC methods. | Small wrapper around Google Calendar, CRM, database, file store |

Out of the box, MCP servers support exposing three primitives to MCP hosts:

| Primitive | Description | Example |
| --- | --- | --- |
| Resources | Read-only endpoints that stream structured context, such as files, database rows, or logs. | MCP Server reads a Python file and returns it to the MCP Host via an HTTP stream. |
| Tools | Function calls with arguments, where the response conforms to a validatable schema. | MCP Server calls the GitHub API to create a GitHub issue after linting a codebase. |
| Prompts | Reusable message templates or multi-turn workflows that are selected by the user. | MCP Server returns a prompt that instructs the LLM on how it should perform a code review. |
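To make the Tools primitive more concrete, here is a minimal in-memory sketch of how a server might answer `tools/list` and `tools/call`. It is not the MCP SDK: the `TOOLS` registry, the `add` tool, and the `handle` function are hypothetical names, and a real server also deals with initialisation, validation, and transport. The descriptor fields (`name`, `description`, `inputSchema`) and the text content shape of the result follow the MCP specification.

```python
from typing import Any


def add(a: int, b: int) -> int:
    """Hypothetical tool body: add two integers."""
    return a + b


# Registry mapping each tool name to its descriptor and implementation.
TOOLS: dict[str, dict[str, Any]] = {
    "add": {
        "descriptor": {
            "name": "add",
            "description": "Add two integers.",
            "inputSchema": {
                "type": "object",
                "properties": {"a": {"type": "integer"}, "b": {"type": "integer"}},
                "required": ["a", "b"],
            },
        },
        "fn": add,
    }
}


def handle(request: dict[str, Any]) -> dict[str, Any]:
    """Dispatch a JSON-RPC request to the matching method."""
    method, params = request["method"], request.get("params", {})
    if method == "tools/list":
        result = {"tools": [tool["descriptor"] for tool in TOOLS.values()]}
    elif method == "tools/call":
        tool = TOOLS[params["name"]]
        output = tool["fn"](**params.get("arguments", {}))
        result = {"content": [{"type": "text", "text": str(output)}], "isError": False}
    else:
        # -32601 is the JSON-RPC "method not found" error code.
        return {"jsonrpc": "2.0", "id": request["id"],
                "error": {"code": -32601, "message": f"Unknown method: {method}"}}
    return {"jsonrpc": "2.0", "id": request["id"], "result": result}


listing = handle({"jsonrpc": "2.0", "id": 1, "method": "tools/list"})
call = handle({"jsonrpc": "2.0", "id": 2, "method": "tools/call",
               "params": {"name": "add", "arguments": {"a": 2, "b": 3}}})
print(listing["result"]["tools"][0]["name"])  # add
print(call["result"]["content"][0]["text"])   # 5
```

Resources and Prompts follow the same request and response pattern under their own method names, such as `resources/read` and `prompts/get`.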

Should I adopt MCP as part of my API Product strategy?

APIs are products in their own right, deserving the same strategic planning, user experience design, and lifecycle management as any customer-facing offering. This is especially true as APIs become critical drivers of scalability, developer engagement, and revenue growth, points we discussed in detail in our blog post “Your API is a product”.

With MCP emerging as a new form of integration standard that enables seamless AI interoperability across API products, here are several compelling reasons to consider adopting MCP as part of your overall API product strategy:

- Reach the AI-native user segment – enable new inbound usage without building proprietary plugins or SDKs for every emerging AI product. MCP makes it simpler for customers to connect large language models (LLMs) and agent-based AI products to first- or third-party data, documents, or actions.

- Accelerate customer onboarding – MCP reduces time-to-value for customers looking to embed your data into their AI products. This not only lowers pre-sales effort but also enables greater economies of scale.

- Create stickiness within users’ existing AI workflows – deep integration into AI tooling increases customer reliance on your platform, reducing churn and elevating perceived value.

- Future-proof your AI data strategy – MCP is on the roadmap of most major AI platforms, including OpenAI, Microsoft, and Google. By adopting MCP, you avoid the need to place bets on non-standard integrations or proprietary frameworks.

- Accelerate adoption of AI workflows – open-sourcing MCP components makes it easier for developers to experiment with AI capabilities using your API products as a substrate. Be sure to complement this with an internal culture of responsible AI development.

- Unlock new monetisation pathways – more AI usage often results in more data processed, higher API volumes, and increased engagement, driving organic platform expansion.

Current state of the MCP landscape

The MCP ecosystem has grown rapidly over the past few months. Anthropic initially released support for MCP Server integration in Claude Desktop, and many other AI products have since followed suit, including:

- Claude Desktop - Extend conversations with contextual access to first- and third-party data and tools right within the Claude Desktop app.

- VS Code - Integrate first- and third-party data and tools directly into your engineers’ workflows via Visual Studio Code’s Copilot agent mode.

- OpenAI Agents - Provide OpenAI-backed agents with dynamic capabilities and live system context through on-demand access to first- and third-party data and tools.

It has also been announced that both Google and OpenAI are bringing MCP support into their core products. As a result, we expect to see MCP Server support in the ChatGPT desktop application in the coming weeks and months.

Adoption of MCP Servers has also expanded significantly, including platforms such as:

- PayPal - Create and manage invoices.

- Stripe - Create coupons, prices, refunds, products and more.

- Mailgun - Create email campaigns and fetch/visualise send statistics.

Creating an MCP Tool for Claude Desktop

To get a sense of MCP’s power, let’s walk through building an MCP Server and integrating it with Claude Desktop. We will create a tool capable of retrieving articles from BBC News. We’ll leverage the BBC News RSS feed, use FastMCP, a Python library for building MCP Servers, and manage dependencies with uv, a high-performance Python package manager.

When using MCP via Claude Desktop, the Claude Desktop app provides both the host and the client components.

At the time of writing, Claude Desktop is by far the easiest route to demonstrating the power of MCP. However, it lacks support for remote MCP servers, resources, and other elements of the MCP standard, although we expect this to change rapidly.

If you’d like to skip ahead, we’ve published the source to GitHub.

You’ll need to create a new uv project with mcp[cli] (the MCP Python SDK, which includes FastMCP) and feedparser specified as dependencies.

pyproject.toml

```toml
[project]
name = "mcp-server-demo"
version = "0.1.0"
requires-python = ">=3.12"
dependencies = [
    "feedparser>=6.0.11",
    "mcp[cli]>=1.6.0",
]
```

Next, we’ll create a FastMCP server along with an MCP Tool capable of fetching and parsing the BBC News RSS XML feed. Because Claude Desktop launches the server as a local process, the two communicate over STDIO in this walkthrough. FastMCP can also serve over HTTP using Server-Sent Events (SSE) for clients that support remote servers.

main.py

```python
import feedparser
from mcp.server.fastmcp import FastMCP

RSS_URL = "https://feeds.bbci.co.uk/news/rss.xml"

# FastMCP takes free-text guidance for clients via `instructions`.
mcp = FastMCP(
    name="bbc_news_mcp_server_demo",
    instructions="An example MCP Server utilising FastMCP and feedparser to inject BBC articles into Claude Desktop",
)


@mcp.tool()
async def get_n_article_titles_and_links_from_bbc_news(limit: int = 5) -> str:
    """Return the titles and links of the latest `limit` BBC News articles."""
    feed = feedparser.parse(RSS_URL)  # fetch and parse the RSS feed
    entries = feed.entries[:limit]    # keep only the first `limit` items
    return "\n".join(f"{e.title} - {e.link}" for e in entries)


if __name__ == "__main__":
    mcp.run()  # defaults to the STDIO transport
```
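To check the shape of the tool's output without network access or Claude Desktop, the title-and-link extraction can be sketched against an inline feed. This version swaps feedparser for the standard library's `xml.etree.ElementTree` purely to keep the example dependency-free. `SAMPLE_RSS` and `titles_and_links` are made-up names, the " - " separator is an assumption, and the sample items are placeholders rather than real BBC articles.

```python
import xml.etree.ElementTree as ET

# Made-up RSS 2.0 document standing in for the live feed.
SAMPLE_RSS = """<rss version="2.0">
  <channel>
    <title>Sample feed</title>
    <item><title>First story</title><link>https://example.com/1</link></item>
    <item><title>Second story</title><link>https://example.com/2</link></item>
    <item><title>Third story</title><link>https://example.com/3</link></item>
  </channel>
</rss>"""


def titles_and_links(rss_xml: str, limit: int = 5) -> str:
    """Return up to `limit` 'title - link' lines from an RSS 2.0 document."""
    root = ET.fromstring(rss_xml)
    items = root.findall("./channel/item")[:limit]
    return "\n".join(
        f"{item.findtext('title', default='')} - {item.findtext('link', default='')}"
        for item in items
    )


print(titles_and_links(SAMPLE_RSS, limit=2))
```

Keeping the parsing in a plain function like this also makes it straightforward to unit test separately from the MCP server wiring.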

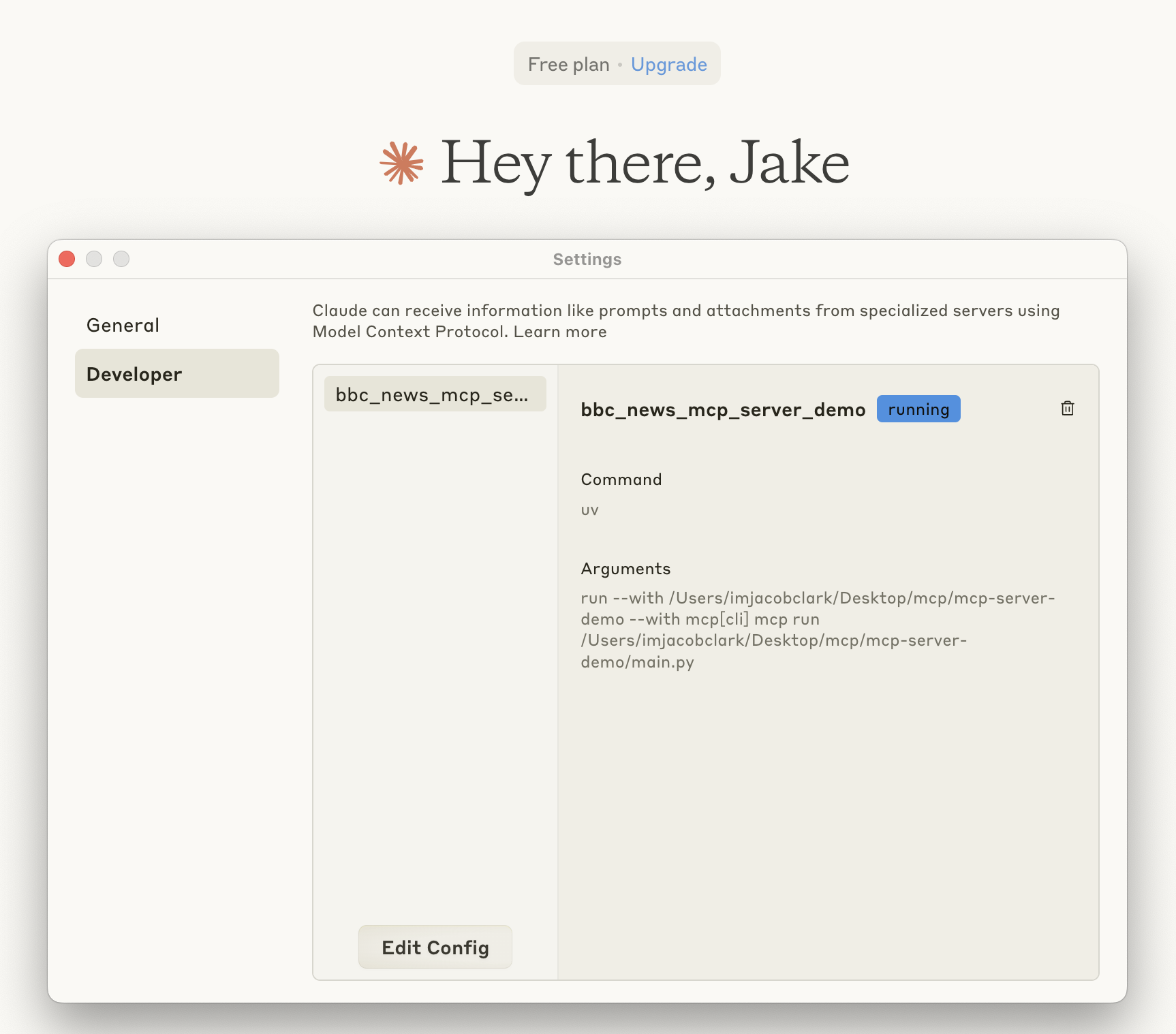

Then, we can install it into Claude Desktop by running `uv run mcp install main.py`, which will create a new claude_desktop_config.json entry.

claude_desktop_config.json

```json
{
  "mcpServers": {
    "news_fetch": {
      "command": "uv",
      "args": [
        "run",
        "--with",
        "/path/to/mcp-server-demo/",
        "--with",
        "mcp[cli]",
        "mcp",
        "run",
        "/path/to/main.py"
      ]
    }
  }
}
```
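If you would rather script this step, an entry of this shape can be merged into an existing config with a few lines of Python. This is a sketch of the idea only, not a description of how the install command works internally: `add_server` is a hypothetical helper, `other_server` is a made-up existing entry, and the function operates on an in-memory dict so that you decide where the file is read from and written to.

```python
import json
from copy import deepcopy


def add_server(config: dict, name: str, command: str, args: list) -> dict:
    """Return a copy of `config` with an MCP server entry added or replaced."""
    updated = deepcopy(config)  # leave the caller's dict untouched
    updated.setdefault("mcpServers", {})[name] = {
        "command": command,
        "args": list(args),
    }
    return updated


# Made-up existing config containing one unrelated server.
existing = {"mcpServers": {"other_server": {"command": "node", "args": ["server.js"]}}}

merged = add_server(
    existing,
    name="news_fetch",
    command="uv",
    args=["run", "--with", "mcp[cli]", "mcp", "run", "/path/to/main.py"],
)
print(json.dumps(merged, indent=2))
```

Returning a new dict rather than mutating the input makes it easy to diff the before and after states before writing anything to disk.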

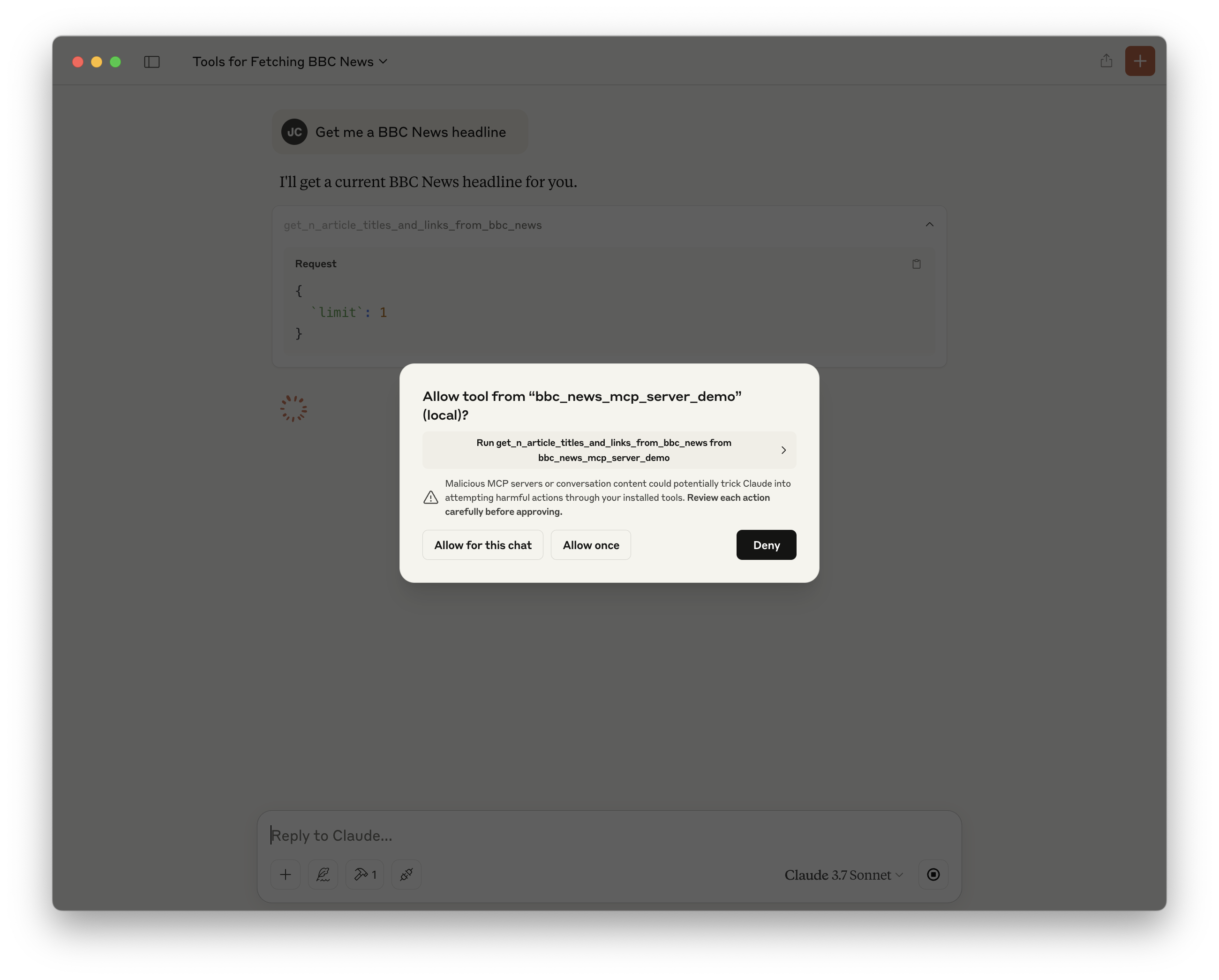

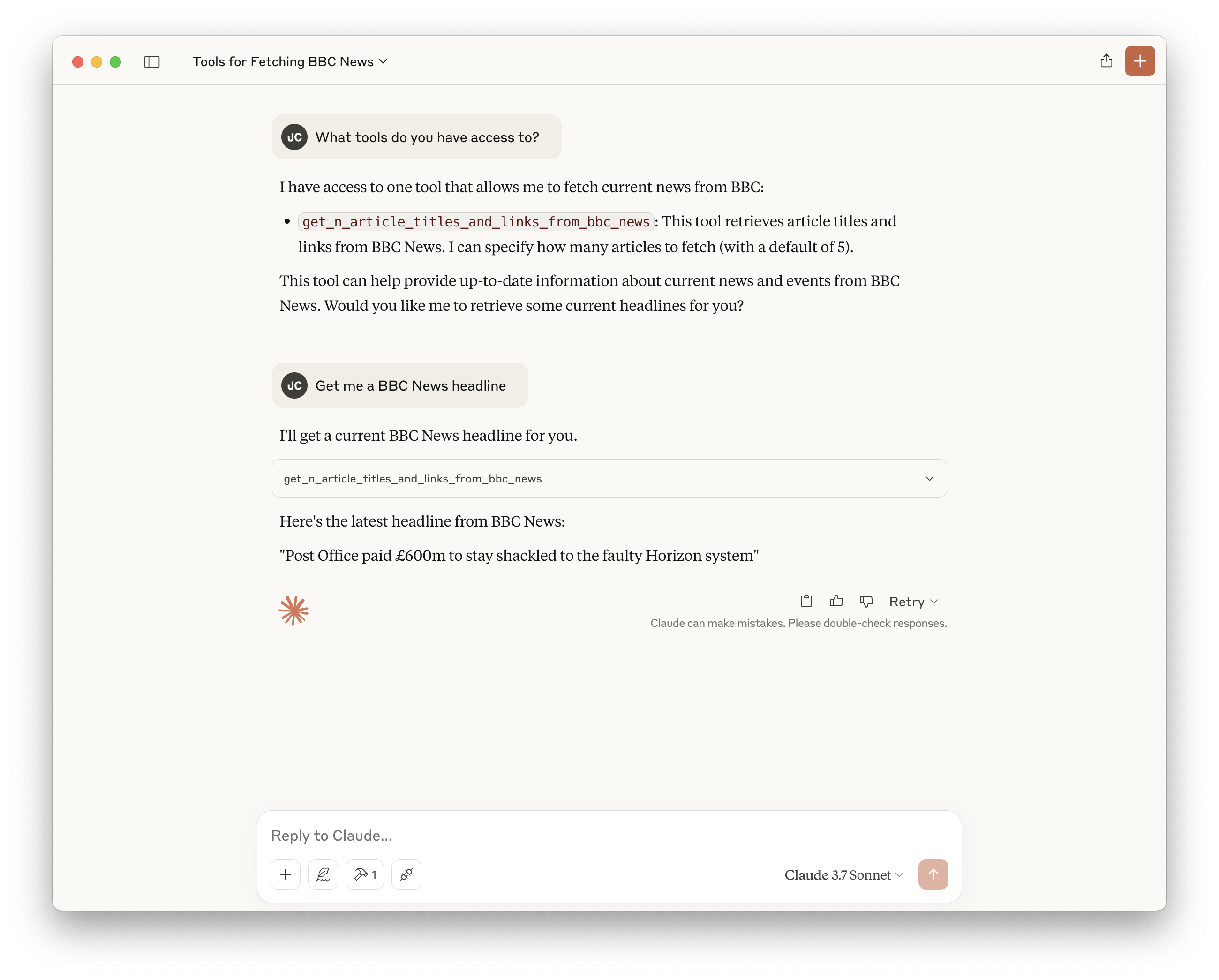

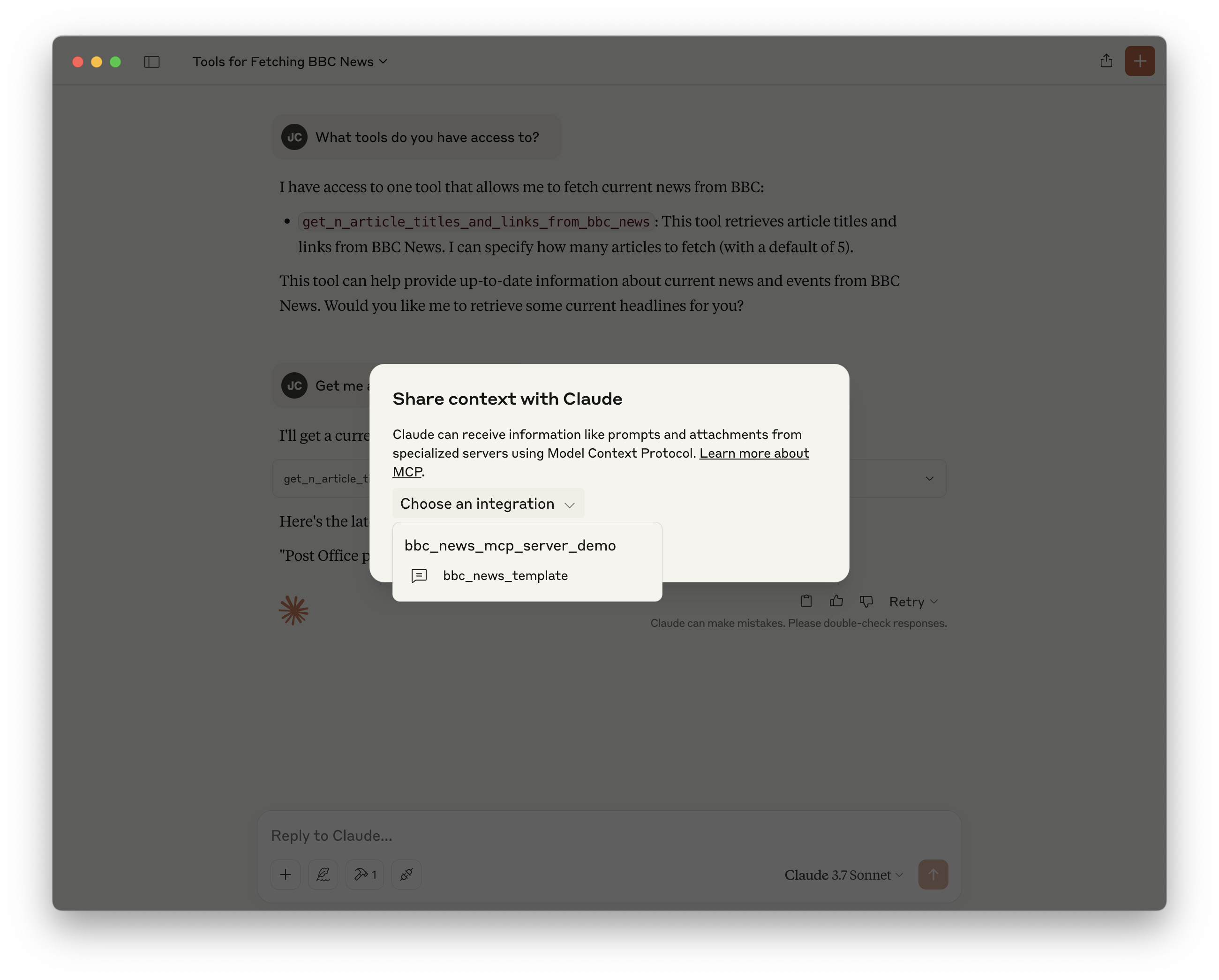

Restart Claude Desktop and prompt it with “Get me a BBC headline.” When Claude requests access, allow the tool to be used.

Once approved, Claude invokes the tool through its MCP client. When the server responds, Claude returns the latest BBC headline as retrieved from the RSS feed.

We can go one step further by defining a prompt that can be selected within the Claude Desktop app, so we don’t have to write it out manually each time. Let’s define an instruction that helps Claude format the response from our tool.

main.py

```python
@mcp.prompt()
def bbc_news_template() -> str:
    """Provides a structured format for displaying BBC News article titles and links."""
    # The {title} and {link} placeholders are returned as-is for the model to fill in.
    return (
        "🌍 Article Title: {title}\n"
        "🔗 Article Link: {link}\n"
    )
```
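The `{title}` and `{link}` placeholders in the template appear to be left for the model to fill in from the tool output. To preview the layout the prompt asks for, you could fill them yourself with `str.format`. The `TEMPLATE` constant, the `render` helper, and the sample article below are illustrative assumptions, not part of the published demo.

```python
# Assumed copy of the prompt layout, one title line and one link line per article.
TEMPLATE = (
    "🌍 Article Title: {title}\n"
    "🔗 Article Link: {link}\n"
)


def render(articles: list[dict[str, str]]) -> str:
    """Fill the template once per article and join the results."""
    return "\n".join(TEMPLATE.format(**article) for article in articles)


# Placeholder article, not a real BBC story.
sample = [{"title": "Example headline", "link": "https://example.com/story"}]
print(render(sample))
```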

Restart Claude Desktop. Before typing your first prompt, click the “Attach from MCP” icon, then select your server and the bbc_news_template prompt.

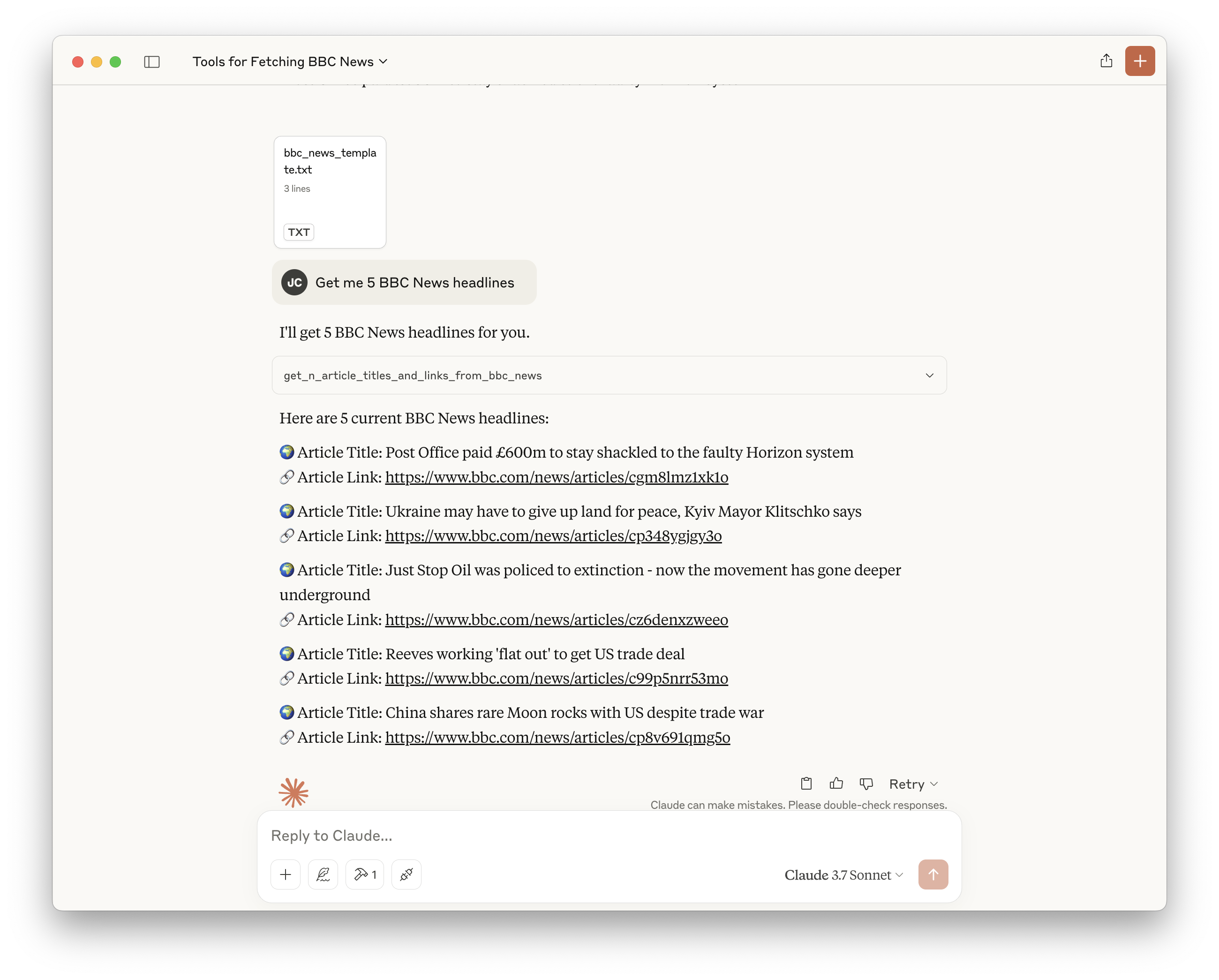

Then we can prompt “Get me 5 BBC News articles” to see both the tool call and the prompt usage!

Conclusion

As APIs evolve from simple interfaces into strategic enablers of platform extensibility, developer productivity, and AI integration, MCP emerges as a timely advancement for modern API product strategies. Rather than replacing REST or GraphQL, MCP serves as an AI-native interoperability layer, abstracting fragmentation across models, agents, and platforms, while delivering runtime flexibility that traditional APIs were never designed to offer.

Adopting MCP enables your API ecosystem to:

- Seamlessly serve both human and AI consumers through a standardised, interoperable interface.

- Accelerate integration velocity by minimising custom development overhead for AI clients.

- Position your platform for emerging AI-native workflows across tools like ChatGPT, Claude Desktop, and Visual Studio Code.

- Future-proof your data and service exposure strategy as LLMs evolve from passive text generators into dynamic integration layers for software orchestration.

Incorporating MCP into your API product strategy is not just a technical consideration; it’s becoming table stakes as AI integration becomes ubiquitous. MCP ensures your APIs remain discoverable, composable, and operational in a rapidly evolving AI ecosystem, without locking you into proprietary integrations or brittle plugin systems.