Serverless 101: An overview with examples

What is serverless?

Serverless as we know it was introduced by AWS in 2014 with the AWS Lambda service. Microsoft Azure and Google Cloud Platform followed suit a few years later.

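When we talk about serverless, we're generally talking about a handful of cloud services (e.g. from AWS, Google Cloud Platform and Azure) that run your code while the provider fully manages the underlying server infrastructure. Serverless functions are often referred to as Function as a Service (FaaS). This is different from Infrastructure as a Service (IaaS), where you rent virtual machines but still manage the servers yourself.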

Serverless is being increasingly used by product teams to innovate faster and build applications without the usual operational overhead of managing servers.

With serverless, rather than having your application servers running all of the time, instances of your application spin up when required, and spin back down once they're done serving the requests.

You simply deploy your application (either as a container image or a zip archive, depending on the service), configure the memory and CPU limits and any environment variables it needs, and the cloud provider does the rest.
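As a rough illustration of the container flavour, here's a minimal Python sketch of an HTTP app that takes its configuration from environment variables. It assumes a platform like Google Cloud Run, which tells the container which port to listen on through the `PORT` environment variable. `GREETING` is a hypothetical variable made up for this example, and `build_body`, `make_server` and `main` are illustrative names, not part of any platform API.

```python
import json
import os
from http.server import BaseHTTPRequestHandler, HTTPServer


def build_body() -> bytes:
    # Configuration comes from environment variables set at deploy time.
    # GREETING is a hypothetical example variable.
    greeting = os.environ.get("GREETING", "hello")
    return json.dumps({"message": greeting}).encode("utf-8")


class Handler(BaseHTTPRequestHandler):
    def do_GET(self):
        body = build_body()
        self.send_response(200)
        self.send_header("Content-Type", "application/json")
        self.send_header("Content-Length", str(len(body)))
        self.end_headers()
        self.wfile.write(body)


def make_server(port: int) -> HTTPServer:
    # Listen on all interfaces so the platform can route traffic to the container.
    return HTTPServer(("0.0.0.0", port), Handler)


def main() -> None:
    # Cloud Run sets PORT to the port it sends requests to; default to 8080 locally.
    port = int(os.environ.get("PORT", "8080"))
    make_server(port).serve_forever()
```

Calling `main()` from the container's entrypoint starts the server. The app itself knows nothing about scaling; the platform decides how many copies of it to run.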

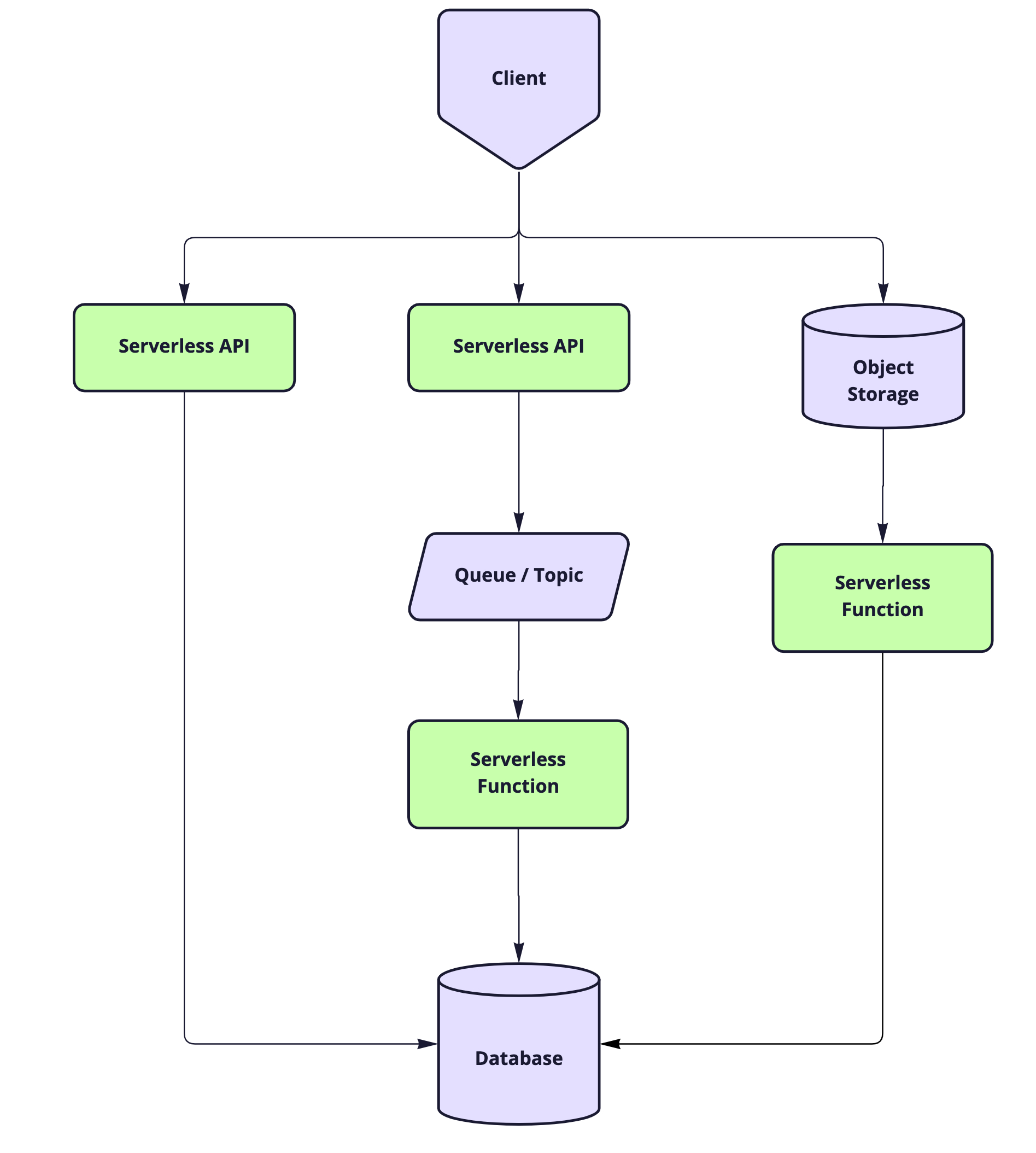

Example use cases

Serverless services can be used to power all sorts of applications including:

- stateless APIs

- asynchronous event-driven tasks

- batch processes

- bulk data processing

Example serverless compute services

Serverless containers are containerised applications that run in a serverless environment, for example Google Cloud Run.

Serverless functions are snippets of code that are triggered in response to an event, for example AWS Lambda, Azure Functions and Google Cloud Functions.
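A serverless function, by contrast, is usually just a handler that the platform calls with the triggering event; there's no web server to run yourself. The sketch below uses the Python handler signature AWS Lambda expects, `handler(event, context)`, and assumes an HTTP event shaped like an API Gateway proxy request (`queryStringParameters`), returning a response in the matching `statusCode`/`body` format. The `name` query parameter is hypothetical.

```python
import json


def handler(event, context):
    """Entry point the platform invokes once per event.

    event:   the triggering event as a dict (here assumed to be an
             API Gateway-style HTTP request).
    context: runtime metadata supplied by the platform (unused here).
    """
    # API Gateway sends null when there is no query string, hence the `or {}`.
    params = event.get("queryStringParameters") or {}
    name = params.get("name", "world")  # hypothetical query parameter
    return {
        "statusCode": 200,
        "headers": {"Content-Type": "application/json"},
        "body": json.dumps({"message": f"hello, {name}"}),
    }
```

The platform receives the request, invokes `handler`, and turns the returned dict into an HTTP response.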

What problem does serverless solve?

Serverless removes a huge operational burden, allowing us to focus more on our applications.

Without serverless, there are several operational tasks required to get our application up and running and keep it healthy.

To begin with, we need to set up the operating system and install basic services such as a web server and monitoring tools.

We need to ensure that our servers are secure and that only authorized people can access them.

We then need to deploy our application to the server, get it up and running, and make sure it writes logs and that the log files are rotated so they don't fill up the disk.

We need to make sure our application will restart if it crashes, and will start automatically after a reboot.

When we make a change to our application, we need to deploy it to the server in a way that doesn't impact any open requests.

We need to patch our servers regularly to fix any vulnerabilities. This can involve downtime, so we might need a maintenance window. If multiple servers run instances of our application, we might instead take a batch of them out of service, patch them and put them back in service.

If our application tends to have spikes in traffic, we need to think about scaling it pre-emptively to ensure it meets demand.

With serverless, we don't need to do any of this.

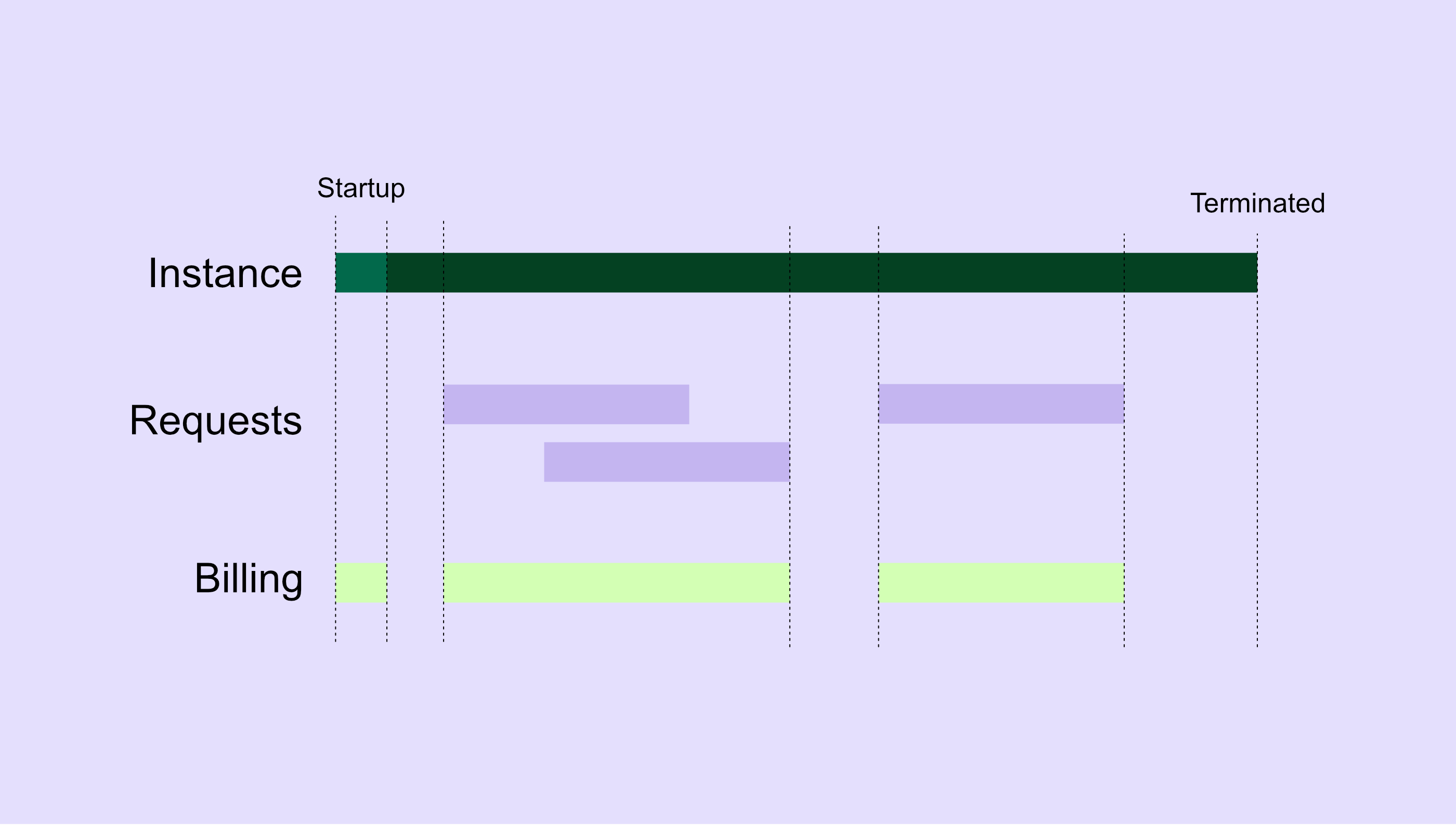

Serverless billing

Google Cloud Run is a good example of how serverless services are billed.

With Cloud Run's default billing, you're billed only for the time your instances spend serving requests, not for idle time. The service scales your application up to meet demand and scales it back down once demand subsides.
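To make the "billed only while serving requests" idea concrete, here's a small Python sketch that works out the billable time for a single instance from a list of request start and end times. It assumes time is billed whenever the instance is handling at least one request, so overlapping requests are counted once and idle gaps are free. `RATE_PER_SECOND` is a made-up figure, not a real price, and the sketch ignores free tiers, per-request charges and any rounding.

```python
from typing import List, Tuple

# Hypothetical price per instance-second; NOT a real Cloud Run rate.
RATE_PER_SECOND = 0.00002

Interval = Tuple[float, float]  # (start_s, end_s) of one request


def billable_seconds(requests: List[Interval]) -> float:
    """Seconds during which at least one request was in flight on the instance."""
    total = 0.0
    span_start = span_end = None
    for start, end in sorted(requests):
        if span_end is None or start > span_end:
            # Gap before this request: close the previous busy span (idle time is free).
            if span_end is not None:
                total += span_end - span_start
            span_start, span_end = start, end
        else:
            # Overlapping request: extend the busy span instead of double-counting.
            span_end = max(span_end, end)
    if span_end is not None:
        total += span_end - span_start
    return total


def estimated_cost(requests: List[Interval]) -> float:
    return billable_seconds(requests) * RATE_PER_SECOND
```

For example, two overlapping requests between 0 s and 3 s plus one request from 10 s to 11 s give `billable_seconds([(0, 2), (1, 3), (10, 11)]) == 4.0`; the 7-second idle gap isn't billed.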

Things to consider

There are a few things to consider before choosing a serverless option.

DevOps

Regardless of the current shape of the organisation, removing the burden of infrastructure management supports DevOps principles by allowing teams to innovate faster and removing hand-offs to Ops.

Cold starts

A long-running, non-serverless application is always ready and waiting to serve traffic. With serverless, however, if there are no idle or active instances (or not enough to meet demand), the cloud platform has to download your container image or source code, start your application and wait for it to become ready before it can send any traffic to it. This delay is known as a cold start.

If low request latency is important, you can still choose serverless, but you will need to optimise your application's start-up time. You can also reserve compute or keep a minimum number of instances running to mitigate cold starts.
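A rough model shows why keeping instances warm helps. In the sketch below, each request in a simultaneous burst gets its own instance (as with AWS Lambda; Cloud Run can serve several requests per instance, which this ignores). Requests that land on an already-warm instance pay only the handling time, while the rest pay a cold start on top. `warm_instances` stands in for idle instances kept by a minimum-instances setting, and the millisecond figures are hypothetical.

```python
from typing import List


def burst_latencies_ms(burst: int, warm_instances: int,
                       startup_ms: int, handle_ms: int) -> List[int]:
    """Latency of each request in a burst, assuming one request per instance.

    The first `warm_instances` requests reuse idle instances; every other
    request waits for a new instance to start (a cold start).
    """
    return [
        handle_ms if i < warm_instances else startup_ms + handle_ms
        for i in range(burst)
    ]


# Hypothetical numbers: 2 s start-up, 100 ms to handle a request.
print(burst_latencies_ms(3, warm_instances=0, startup_ms=2000, handle_ms=100))  # [2100, 2100, 2100]
print(burst_latencies_ms(3, warm_instances=2, startup_ms=2000, handle_ms=100))  # [100, 100, 2100]
```

Reducing `startup_ms` (faster start-up) and raising `warm_instances` (minimum instances) attack the same problem from two sides; the second costs money because those instances are kept running.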

Stateless

With serverless, you can't rely on memory persisting between invocations, so any state you need should be stored elsewhere. That being said, you can still benefit from lazy-loading expensive resources and caching them between requests. For instance, if connecting to a database takes half a second, you can keep the connection open between requests; if the same instance serves several requests, only the first one pays the cost of connecting.

CPU throttling

When an instance has finished serving requests, it may stick around for a while in an idle state, but its resources (CPU in particular) are usually throttled down to a bare minimum. This means you should avoid running logic after the response has been sent.

I've seen engineers make the mistake of starting asynchronous tasks that complete after the response has been sent. The tasks had error handling to check whether they succeeded, but because the instance's resources were throttled, not only did the tasks fail, the error handling didn't run either, so the team were none the wiser that the code wasn't running.
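One way to avoid that trap is to finish any follow-up work before returning the response. In this Python `asyncio` sketch, `save_audit_record` is a hypothetical follow-up task (think of writing an audit record). The handler awaits it, so both the work and its error handling complete while the instance still has full resources, rather than firing it off with `asyncio.create_task` and returning straight away.

```python
import asyncio
from typing import List


async def save_audit_record(store: List[str], item: str) -> None:
    """Hypothetical follow-up work, e.g. writing an audit record."""
    await asyncio.sleep(0.01)  # simulate I/O
    if not item:
        raise ValueError("empty item")
    store.append(item)


async def handle_request(store: List[str], item: str) -> dict:
    # Risky on serverless: asyncio.create_task(save_audit_record(store, item))
    # followed by an immediate return leaves the task running after the
    # response is sent, when the instance may already be throttled.
    try:
        await save_audit_record(store, item)  # do the work first...
    except ValueError as exc:
        # ...so failures are caught and reported while we can still respond.
        return {"status": 500, "error": str(exc)}
    return {"status": 200}  # ...then send the response
```

The trade-off is a slower response. If the work genuinely needs to outlive the request, handing it off to a queue or a separate background service is the usual alternative.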

Summary

In summary, I'm a big fan of serverless: it solves a whole bunch of problems, makes it easier to decouple your services and lets you spend more time focussing on your application.